Artificial Intelligence (AI) technologies have played a vital role in responding to the COVID-19 crisis. AI advancement also has the potential to support the global pursuit of the Sustainable Development Goals (SDGs). In developed economies, AI has already been widely adopted by businesses and hospitals. However, as AI grows, emerging challenges should be addressed globally to prevent potential misuse. The G20 community should lead the development and enforcement of AI Principles toward establishing a globally standardized AI policy that regulates AI’s use among countries without negatively affecting the growth and positive potential of the AI industry.

Challenge

While AI demonstrated great potential during the pandemic, there is growing concern over public trust in AI, as the technology remains relatively unknown. Apart from the lack of empathy and ethical considerations in its execution, there is a risk of AI taking over jobs and creating widespread unemployment. The digital divide is also a concern for a significant share of the population, owing to either affordability or inadequate ICT infrastructure.

Furthermore, misuse of AI, such as deepfake videos (AI-generated videos that imitate the expressions and voice of a particular person), can even lead to identity theft (Cervantes, 2021). Some AI-based software is also racially or otherwise biased, reflecting structural biases in society. AI can amplify negative biases in human behavior through its feedback loops, potentially creating a “monster.” Thus, crucial decision-making by AI may not always be ideal.

In general, AI can present the following challenges.

- Datasets on which AI relies reflect human biases. Racial, gender, age, communal or moral, and other socio-economic factors affect conclusions made by AI. Additionally, deep-learning technology used for business decisions is opaque; it does not expose the associations behind its conclusions. Instead, AI amplifies existing biases by automating tasks. This can extend to creating security flaws and identity theft, or internet and power failures. AI increases the potential scale of bias, since a single flaw could affect millions of people and expose companies to class-action lawsuits.

- Computing power is another requirement for processing the massive volumes of data that AI needs. Integrating AI with existing technologies requires that it be compatible with current programs and not hamper existing output. Other challenges include the need for data storage, handling, and training, as well as costly infrastructure during the transition.

- Data management and handling is another key challenge. The sensitivity of data collected by AI may threaten privacy, ethical guidelines, or even national or international law (Challenges of AI, 2020). Implementation requires clearly defined strategies with a monitoring and feedback mechanism; without one, the consequences can be dire.

AI algorithms are often kept secret to prevent security breaches, which results in poor transparency. The lack of a governance process also results in poor accountability for unethical practices. Paradoxically, a large workforce is required for data annotation and labelling, as the quality and correct labelling of data is a prerequisite for AI systems to draw correct inferences. Access to massive training datasets is another requirement; the quality of data in terms of consistency, integrity, accuracy, and completeness is important. AI must be able to work despite data insufficiencies (Abdoullaev, 2021).
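The data-quality aspects named above can be made measurable before a dataset is used for training. The following is a minimal sketch, not a standard tool: the function name `data_quality_report`, the field names, and the three metrics chosen are illustrative assumptions.

```python
from typing import Any


def data_quality_report(
    records: list[dict[str, Any]],
    required_fields: list[str],
    allowed_labels: set[str],
    label_field: str = "label",  # hypothetical field name
) -> dict[str, float]:
    """Return simple quality ratios (0.0 to 1.0) for a labelled dataset."""
    total = len(records)
    if total == 0:
        # An empty dataset has nothing to measure.
        return {"completeness": 0.0, "label_validity": 0.0, "duplicate_rate": 0.0}

    # Completeness: every required field is present and non-empty.
    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields) for r in records
    )

    # Label validity (a proxy for accuracy): label is in the agreed set.
    valid = sum(r.get(label_field) in allowed_labels for r in records)

    # Consistency: count exact duplicate records.
    seen: set[tuple] = set()
    duplicates = 0
    for r in records:
        key = tuple(sorted((k, repr(v)) for k, v in r.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    return {
        "completeness": complete / total,
        "label_validity": valid / total,
        "duplicate_rate": duplicates / total,
    }
```

A reviewer could run such a report on each new batch of annotated data and hold back batches whose ratios fall below thresholds agreed in advance.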

Proposal

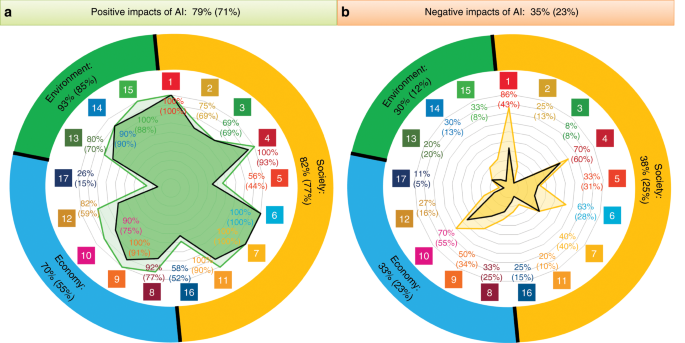

Artificial Intelligence (AI) has wide potential to replace systems that work by identifying, sifting through, and analysing large amounts of data to interpret patterns and automate processes (Toplic, 2020). AI can amplify ongoing work on the United Nations’ 17 Sustainable Development Goals (SDGs). AI-based technologies could enhance 82 percent of the SDGs’ societal outcome metrics, especially for SDG 1 on poverty, SDG 4 on quality education, SDG 6 on clean water and sanitation, SDG 7 on affordable and clean energy, and SDG 11 on sustainable cities (Vinuesa, et al., 2020). AI can also improve understanding of climate change and support low-carbon energy systems, two of the many ways it can improve performance on the SDGs (Reilly, 2021). Within the economy-related SDGs, technological advances enabled by AI could have a positive impact on 70 percent of targets (42 targets) and a negative impact on 33 percent (20 targets) (Vinuesa, et al., 2020).

Source: (Vinuesa, et al., 2020)

A net positive impact of AI-enabled technologies is associated with increased productivity, while the negative impacts are mainly related to increased inequalities (Acemoglu & Restrepo, 2018).

AI-based management solutions provide end-to-end visibility that helps companies make use of opportunities, and the digital transformation across various industrial supply chains is clearly visible. According to PwC research, 52 percent of companies accelerated their AI adoption plans during the pandemic, while 86 percent say that AI has become a mainstream technology in their company. In pandemic response, AI can assist in crisis management and amelioration at different stages, from early infection detection, spread prevention, vaccine and cure development, and recovery monitoring to fighting misinformation and even supporting the economy to operate virtually (Using artificial intelligence to help combat Covid-19, 2020).

What is happening around the world: a few examples within the G20 community

India: The South Asian giant’s AI industry is set to grow by 1.5 times in 2021 compared to 2019, with an expected industry value of USD 7.8 billion by 2025 at a compound annual growth rate (CAGR) of 20.2 percent. It is the third-largest AI market in the Asia-Pacific, with 46 percent of Indian enterprises citing innovation as the key driver of AI investment (Jayadevan, 2021). The National Institution for Transforming India (NITI Aayog), a policy think tank of the Government of India, recently passed 30 recommendations to accelerate India’s AI growth (Future Networks (FN) Division, Telecommunication Engineering Center, 2020). Such a development greatly contributes toward SDG 9 on building resilient infrastructure.

Brazil: The Brazilian government has already adopted the OECD’s five principles as its national AI strategy, a step that should be followed by all G20 countries and the rest of the world to reach uniform global AI regulatory standards. The government also extended the strategy to secure “qualifications for a digital future,” training teachers and students in a form of national literacy programme for AI (Lowe, 2021). This greatly contributes to SDG 8 on improving economic growth and employment.

The Canadian government’s Covid-19 AI research grants produced projects on medical imaging, prediction of disease severity upon infection, effectiveness of medicine, virus mutation and transmission, identification of at-risk populations, and mental health impacts, contributing positively to SDG 3 on health (Gordon, 2022). These grants illustrate AI’s possibilities. Canadian company BlueDot used AI to detect the virus outbreak in Wuhan, China (How a Canadian start-up used AI to track China virus, 2020).

The US government promoted AI research through grants for AI’s application in the pandemic. The National Security Commission researched AI’s role in supporting pandemic response and made recommendations on the application of AI in situational awareness for disease surveillance and diagnostics, production of vaccines and therapeutics, and advancing medical equipment diagnostics for ongoing healthcare (SDG 3 on health). For instance, US-based DataRobot developed AI-based models that predict Covid-19 spread to aid policy decisions. AI-based robots are able to diagnose infection remotely and are being tested in Boston hospitals (Gordon, 2022).

China explored new heights in AI-driven pandemic management, from 5G robots to thermal-camera-equipped drones. Medical care, public security, education, and logistics are sectors benefiting from 5G applications. China fielded the largest number of AI products, over 500, to support pandemic crisis resolution; these included intelligent identification and R&D in drug development. Apart from pandemic handling, AI boosted industry performance and innovation during the pandemic. AI shows great promise in supporting human effort and large-scale geographical coordination and collaboration (Yuyao, 2020). This contributes both to SDG 9 (infrastructure), by establishing resilient infrastructure, and to SDG 3 (health), by improving the health sector as a whole.

Australia illustrated the use of AI in medical diagnosis. A collaboration between the University of Sydney and the start-up DetectED-X developed a technology that proved helpful in improving the accuracy of breast cancer detection. This technology was adapted to detect Covid-19 using lung CT scans of patients. Furthermore, Google’s DeepMind was successful in predicting protein structures of the Covid-19 virus; the model was later adopted by CSIRO’s Australian e-Health Research Centre to identify possible strains of the virus on which vaccines can be tested, contributing positively to SDG 3 (An AI Action Plan for All Australians, 2020).

G20 Perspectives and Practices

In 2019, G20 Leaders welcomed the G20 AI Principles, drawn from the OECD Recommendation on AI. The principles foster public confidence in AI technologies so that their potential can be realized, by seeking digital inclusiveness, transparency, and accountability. At the 2020 Saudi G20 Leadership Summit, G20 Digital Ministers confirmed their commitment to advancing the G20 AI Principles. The G20 economic ministers adopted a declaration emphasizing the role of connectivity, digital technologies, and policies in accelerating the G20’s collaboration and response to the Covid-19 pandemic.

Some examples: India has adopted inclusive growth as a focus to remove systematic biases while promoting ethics and privacy in building an AI ecosystem. Germany’s AI strategy focuses on the benefits of technology for people and the environment, with responsible societal development as a core objective, and aims to achieve all five values-based G20 AI principles. National-level initiatives may differ according to each country’s priorities among the principles. It may be beneficial and easier if an integrated principles-based system is developed for a reliable AI ecosystem (Box, 2020).

AI systems must be aligned with AI principles; purpose specification and protection against privacy infringement must be taken care of. Privacy protection must also be reflected in AI-powered monitoring. G20 countries can collaborate to learn about the patterns followed by the pandemic and make policy decisions accordingly (Using artificial intelligence to help combat Covid-19, 2020).

The G20 deepened discussions on the implementation of AI principles in 2020. The role of digital technologies in supporting Covid-19 pandemic management and the related economic recovery was also debated by G20 countries. The key principles that were adopted can be summarised as follows.

- Right to know data, use, and privacy: The content, source, and purpose of the data used must be made available to all parties, who have the right to know the ‘how, what, and where’ of data. Data must be accurate, and compliant with privacy protection.

- Quality and accuracy of data: Data quality is critical in ensuring optimum decision-making. Multiple levels of review, not just initially but on a regular basis, are important in weeding out flaws arising from the data or the algorithm.

- Human-focused: Human control must be exercised; the system should be testable and regulated. A human impact review must be mandatory in development and transitions.

- Access to AI: The benefits of AI should be made available to all, without creating divides.

- Reliability: AI should not falter and produce unanticipated outcomes. Human control is essential to ensure this.

- Transparency: It is imperative that information about how any AI-enabled system or network operates and makes decisions is made available to all of its stakeholders.

- Accountability: Accountability with respect to AI safety and responsibility needs to be assigned to people, corporations or relevant governing bodies who design, manage and operate AI-enabled systems (Twomey, 2020).

- Protection and safeguarding of data: AI must not inadvertently disclose sensitive or private information, and AI systems must have no inherent security vulnerabilities.

At the Extraordinary G20 Digital Economy Ministerial Meeting, South Korea shared its Covid-19 response experience, emphasizing the relevance and promising role of digital technologies in accelerating pandemic response, as well as their ability to mitigate future crises. G20 countries can achieve greater success by sharing the policy interventions, practices, lessons, and solutions developed during the Covid-19 response (Minister Choi Shared Korea’s COVID-19 Response Experience at Extraordinary G20 Digital Economy Ministerial Meeting, n.d.).

As AI grows, the G20 nations need to set a standard for uniform practice that the world can follow, both to regulate AI and to promote its responsible growth within the global economy (Minister Choi Shared Korea’s COVID-19 Response Experience at Extraordinary G20 Digital Economy Ministerial Meeting, n.d.).

Developing globally standardized AI regulating policies:

In the post-pandemic economic space, a globally standardized AI regulating policy framework would not only bring confidence and trust among the public but also guide business leaders, data scientists, and developers toward responsible outcomes. It is important that partnering nations align with a common vision and treat all challenges as global. AI has been a great help during the Covid-19 pandemic, assisting vaccine producers with algorithms that helped doctors to rapidly test and roll out mRNA vaccines. It was not only a leap toward the future but also a step toward sustaining progress on SDG 3 of maintaining sufficient healthcare for all. AI will continue to be a key player in achieving the SDGs, and regional challenges must not affect the implementation of AI-enabled systems, as this limits the possibilities that AI has to offer. Consensus building is vital in arriving at mutually acceptable principles, boundaries, and implementing systems, as the guidelines must not follow an ivory-tower approach or anything far from on-ground operational realities. Here, the guiding principle remains that a chain is only as strong as its weakest link. The technology is for the benefit of all; otherwise, investments in the solutions may turn out less efficient, bottlenecked, or futile.

Responsible growth and economic integration of AI technologies in the post-pandemic era:

Continuous research, innovation, monitoring, and feedback mechanisms are indispensable in building the technology and making it flourish to everyone’s benefit. Even if developing countries of the G20 community are not themselves able to afford AI research and development, the R&D done by the developed economies and the regulations set up by the whole community would greatly assist the developing countries of the G20 and beyond to benefit from AI in economic growth and improved world trade. For instance, green technology has come a long way in creating sustainable growth through environment-friendly technologies, positively impacting SDG 13 (climate change). AI has been a great contributor in these sectors, be it in electric cars or in carbon capture technologies. Mutual cooperation and inter-regional economic integration will help develop the most productive and creative systems, provided that implementation prioritises long-term vision over short-term goals for all countries alike.

Digital Inclusiveness for the growing AI industry:

The growth of the AI industry has been so rapid that the necessary infrastructure is insufficient to practice AI in many places around the world, especially in developing countries and the LDCs, which risks exacerbating the existing digital divide. The G20 leadership must support building the required infrastructure for these nations to improve their national AI policies and infrastructures. AI-based digital infrastructure has the potential to reach people at all levels, reducing inequalities and contributing toward SDG 10 (Reducing Inequalities).

Furthering telecommunication networks and digital infrastructure by making them secure and resilient in underserved regions and communities, health provision, and research environments is important. A robust infrastructure proves highly valuable for networking in crises. Digital technologies and products go a long way in supporting economic and social activity in many fields, such as education, work, or medical care, in crisis situations. Access to such products will narrow the digital divide. Minimising the digital divide will require a new approach that transcends boundaries and ensures that the necessary investments and infrastructure are in place.

Developing International Standards for Inclusion:

To reap the most benefit from AI, collective efforts on a global scale will be most effective, extending benefits to all while mitigating its negative effects. Establishing and implementing international standards and making open-source software available will provide a common platform and means for different parties to collaborate in exploring the possibilities of AI. Representation of relevant stakeholders will ensure that datasets, systems, and processes reflect accuracy and inclusion in the outcome. Guidelines must be established after multi-level review by multiple governing bodies to make the systems as sound as possible, yet also sufficiently flexible that they can be continuously improved following periodic reviews. A widely accepted standard for AI regulation would be an international collaboration of great size, positively contributing towards SDG 17 (Global Partnership).

Safeguards for legal, ethical, private, and secure use of AI and Big Data:

Human rights must be safeguarded by regulating and enforcing protective algorithms and flows that protect privacy and address ethical concerns. Apart from processes, data collection, pooling, processing, and sharing must be in line with all ethical and legal obligations. Systems must be aware of and compliant with regional and international laws, over and above the fundamental safeguards to ensure non-discrimination and no harm to human beings with respect to, but not limited to, their identity, finances, career, safety, and health. Importance must be given to which datasets are being used and for what purposes, as what is fed into a system decides its outcomes. Strong human support is necessary to decide, review, and sort datasets so as to obtain desirable outcomes and ensure that negative outcomes remain negligible.

Eliminate Bias in AI:

AI-based technologies amplify human biases at scale. The data used to train AI models should represent diverse perspectives in order to reduce bias. Regulations should be enacted to require bias audits of AI applications, especially where bias can have an adverse effect. We must prevent any discrimination, including that based on race, color, national or ethnic origin, religion, sex, sexual orientation, disability, gender identity, or gender expression. For instance, automated employment decision tools used to screen job candidates must be audited for bias. Similarly, AI in social media can amplify negative biases and reach billions of users, and therefore must be audited for bias regularly.
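One simple form a bias audit of a screening tool could take is a comparison of selection rates across groups. The sketch below is illustrative only: the function names are hypothetical, and the 0.8 threshold is an assumption borrowed from the "four-fifths" heuristic commonly cited in US employment-selection guidance, not something this brief prescribes.

```python
from collections import defaultdict
from typing import Iterable


def selection_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of candidates selected in each group.

    `decisions` is a sequence of (group, was_selected) pairs.
    """
    totals: dict[str, int] = defaultdict(int)
    chosen: dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {g: chosen[g] / totals[g] for g in totals}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate relative to the most-selected group."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so no disparity can be measured.
        return {g: 1.0 for g in rates}
    return {g: r / highest for g, r in rates.items()}


def flag_groups(rates: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the (assumed) threshold."""
    return sorted(g for g, ratio in impact_ratios(rates).items() if ratio < threshold)
```

A flagged group is a prompt for human review rather than proof of discrimination; a fuller audit would also consider sample sizes and intersecting attributes.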

Reliability:

Online platforms are susceptible to cyber-attacks and malicious activities that threaten digital governance and the economy. Digital platforms and other stakeholders should work in tandem to counter misinformation, data theft, hoaxes, and online scams. Mechanisms to avert such situations, as well as to control damage, must be established to ensure that online platforms are safe, robust, and resilient. A system that is vulnerable to attacks is not reliable and is hence inefficient.

Transparency:

Even if a system is developed to be resistant to attacks, it should still be transparent. Transparency is crucial in informed decision-making, whether for legal, medical, or policy considerations. Understanding the processes by which AI arrives at inferences is essential, as one wrong assumption can lead to misinformed decisions. Human validation will ensure that correct inferences are drawn. Ensuring transparency will also aid in facilitating course correction and fine-tuning of AI’s processes. AI’s quick computational ability supports human efforts, but the human capacity to question always carries more weight (Challenges and opportunities of Artificial Intelligence for Good, n.d.).

Privacy Laws:

AI algorithms are built using consumer data, which brings the inherent risk of leaking that sensitive consumer data. Standardized global and local laws are important to protect consumers. These laws should require organizations to notify consumers about how their information will be used. Additionally, audits should be conducted to ensure that AI algorithms are not leaking sensitive consumer data in any way.

Human Oversight for High-Risk AI Systems:

High-risk AI systems include those used for remote biometric identification systems, safety in critical infrastructure, educational or employment purposes, eligibility for public benefits, credit scoring, and dispatching emergency services. Some AI systems used for law enforcement, immigration control, and the administration of justice are also deemed high risk. High-risk AI systems must be designed to allow users to “oversee” them in order to prevent or minimize “potential risks.” Design features should enable human users to avoid overreliance on system outputs (“automation bias”) and must allow a designated human overseer to override system outputs and to use a “stop” button.
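The override and “stop” requirements described above can be sketched as a thin wrapper around a model. This is a minimal illustration under stated assumptions: the class `OverseenSystem`, the exception `SystemStopped`, and the audit-log fields are hypothetical names, not part of any regulation or library.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


class SystemStopped(RuntimeError):
    """Raised when a decision is requested after the stop button was used."""


@dataclass
class OverseenSystem:
    # The wrapped model: takes a case and returns a suggested outcome.
    model: Callable[[dict], str]
    stopped: bool = False
    audit_log: list = field(default_factory=list)

    def stop(self) -> None:
        """The 'stop' button: block all further automated decisions."""
        self.stopped = True

    def decide(self, case: dict, human_override: Optional[str] = None) -> str:
        """Return the final outcome, letting a human overseer override it."""
        if self.stopped:
            raise SystemStopped("system halted by human overseer")
        suggestion = self.model(case)
        final = human_override if human_override is not None else suggestion
        # Record both the suggestion and the final outcome so reviewers can
        # see how often outputs are accepted without challenge.
        self.audit_log.append(
            {
                "case": case,
                "suggestion": suggestion,
                "final": final,
                "overridden": human_override is not None,
            }
        )
        return final
```

Logging the suggestion alongside the final outcome is one way to monitor for automation bias: a very low override rate on contested cases may indicate overreliance on system outputs.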

Conclusion:

With a wide range of challenges surrounding the evolving use of Artificial Intelligence, it is crucial for nations worldwide to agree on a set of regulations that serves as a check on rapidly progressing uses of AI in industry and even at the consumer level, especially by big tech companies. A standardized AI regulation across the G20 community and beyond would not only help regulate AI uses and prevent unwanted security breaches but also serve as a structure for AI developers to follow. Regulations should promote system transparency and ensure privacy to gain users’ confidence in the wider use of AI. Most importantly, bringing human oversight into AI regulation would increase the reliability of, and confidence in, advanced technologies like AI.

References

Abdoullaev, A. (2021, September 7). What are the biggest challenges in Artificial Intelligence and how to solve them? Retrieved from BBN Times: https://www.bbntimes.com/technology/what-are-the-biggest-challenges-in-artificial-intelligence-and-how-to-solve-them

Acemoglu, D., & Restrepo, P. (2018, January). Artificial Intelligence, Automation and Work. Retrieved from National Bureau of Economic Research, Cambridge: https://www.nber.org/system/files/working_papers/w24196/w24196.pdf

AI Seen Gaining in Post-Pandemic Era. (2021, September 13). Retrieved from OM Sterling Global University: https://www.osgu.ac.in/blog/ai-seen-gaining-in-post-pandemic-era/

An AI Action Plan for All Australians. (2020). Retrieved from Department of Industry, Science, Energy, and Resources, Australian Government: https://consult.industry.gov.au/digital-economy/ai-action-plan/supporting_documents/AIDiscussionPaper.pdf

Box, S. (2020, July 24). How G20 countries are working to support trustworthy AI. Retrieved from OECD Innovation Blog: https://oecd-innovation-blog.com/2020/07/24/g20-artificial-intelligence-ai-principles-oecd-report/

Cervantes, E. (2021, June 28). What is deepfake? Should you be worried? Retrieved from Android Authority: https://www.androidauthority.com/what-is-deepfake-1023993/

Challenges and opportunities of Artificial Intelligence for Good. (n.d.). Retrieved from AI for Good: https://aiforgood.itu.int/challenges-and-opportunities-of-artificial-intelligence-for-good/

Challenges of AI. (2020, January 21). Retrieved from 3 Pillar Global: https://www.3pillarglobal.com/insights/artificial-intelligence-challenges/

Digitalisation and Innovation. (n.d.). Retrieved from oecd.org: https://www.oecd.org/g20/topics/digitalisation-and-innovation/

Gordon, C. (2022, January 16). AI Innovations Accelerated By Government And Business Leadership Fight Against COVID-19. Retrieved from Forbes: https://www.forbes.com/sites/cindygordon/2022/01/16/ai-innovations-accelerated-by-government-and-business-leadership-fight-against-covid-19/?sh=541705fd7454

How a Canadian start-up used AI to track China virus. (2020, February 20). Retrieved from The Economic Times: https://economictimes.indiatimes.com/tech/ites/how-a-canadian-start-up-used-ai-to-track-china-virus/how-did-it-do-it/slideshow/74223031.cms

Minister Choi Shared Korea’s COVID-19 Response Experience at Extraordinary G20 Digital Economy Ministerial Meeting. (n.d.). Retrieved from Ministry of Science and ICT I-Korea: https://www.msit.go.kr/eng/bbs/view.do?sCode=eng&mId=4&mPid=2&pageIndex=&bbsSeqNo=42&nttSeqNo=431&searchOpt=&searchTxt=

Reilly, J. (2021, July 17). How Can AI Help in Achieving the UN’s Sustainable Development Goals? Retrieved from Akkio: https://www.akkio.com/post/how-can-ai-help-in-achieving-the-uns-sustainable-development-goals#:~:text=A%20new%20study%20published%20in,SDG%2011%20on%20sustainable%20cities.

Toplic, L. (2020, October 8). AI in the Humanitarian Sector. Retrieved from Reliefweb: https://reliefweb.int/report/world/ai-humanitarian-sector

Twomey, P. (2020, December 10). Towards a G20 Framework For Artificial Intelligence in the Workplace. Retrieved from G20 Insights: https://www.g20-insights.org/policy_briefs/building-on-the-hamburg-statement-and-the-g20-roadmap-for-digitalization-towards-a-g20-framework-for-artificial-intelligence-in-the-workplace/

Using artificial intelligence to help combat COVID-19. (2020, April 23). Retrieved from oecd.org: https://www.oecd.org/coronavirus/policy-responses/using-artificial-intelligence-to-help-combat-covid-19-ae4c5c21/

Vinuesa, R., Azizpour, H., Leite, I., Balaam, M., Dignum, V., Domisch, S., . . . Nerini, F. F. (2020, January 13). The role of artificial intelligence in achieving the Sustainable Development Goals. Retrieved from Nature Communications: https://www.nature.com/articles/s41467-019-14108-y

Yuyao, L. (2020, November 20). Graphics: How does G20 use tech in joint fight against COVID-19? Retrieved from CGTN: https://news.cgtn.com/news/2020-11-20/Graphics-How-does-G20-use-tech-in-joint-fight-against-COVID-19–VzEbBU5Esw/index.html