Although recent developments in healthcare AI offer more accurate and economical ways to save human lives, AI solutions must be created ethically. To that end, this policy brief proposes a framework that accommodates the rapid but careful adoption of AI technology in the healthcare industry. Having identified two distinct existing governance systems, centralized and federated, we argue that a federated system is the ideal environment for health AI development. An integrated general roadmap should also be constructed to help nations formulate strategies for developing AI healthcare adaptively.

Challenge

Healthcare is a heavily regulated industry; however, recent developments in artificial intelligence (AI) are pushing for faster adoption of AI healthcare solutions because of their potential to save lives accurately and economically. Issues facing the healthcare industry that AI could help solve include cost, accuracy, and the lack of holistic, individualized healthcare systems. Advanced AI can help doctors diagnose diseases more accurately and thus provide measured, personalized treatment. This can significantly reduce the cost of human effort, leading to lower medical and pharmaceutical prices. Patients can also access their own data and analytics, which helps them understand the complex relationships between symptoms and medicines that shape their health status. Transparency also lets patients know when AI is involved in their diagnosis and treatment.

Although recent developments in healthcare AI can provide better solutions and less expensive treatments, AI solutions must be developed ethically. On the technical side, AI may face significant problems concerning bias, ethics, reliability, distributed points of care, scalability of treatment, data interoperability among doctors, hospitals, and pharmaceutical laboratories, and prevention programs. Policy should address these concerns, including how to: support data collection mechanisms that keep data free from bias and discrimination; protect privacy; support clinical testing while complying with codes of conduct; and optimize points of care so that every region has equal access. On the non-technical side, policy should focus on accelerating the use of technology to reduce healthcare costs and increase access to healthcare services. This will require intensive collaboration among various stakeholders.

We seek to identify the primary problems and design policy that accelerates adoption and reduces friction. Most current healthcare policy focuses on coverage and the underlying cost of healthcare; little of it guides AI technology or makes room for it in the healthcare industry.

The G20 comprises nations with diverse governmental systems and structures. Because the proposed framework is based on a general view of healthcare and its development environment, it may be implemented in any nation, with appropriate adjustments, regardless of political views or ideology. Each country should have a community-driven regulatory framework in which decisions on the ethics and governance of AI development are made with every stakeholder in the healthcare system taken into account.

Proposal

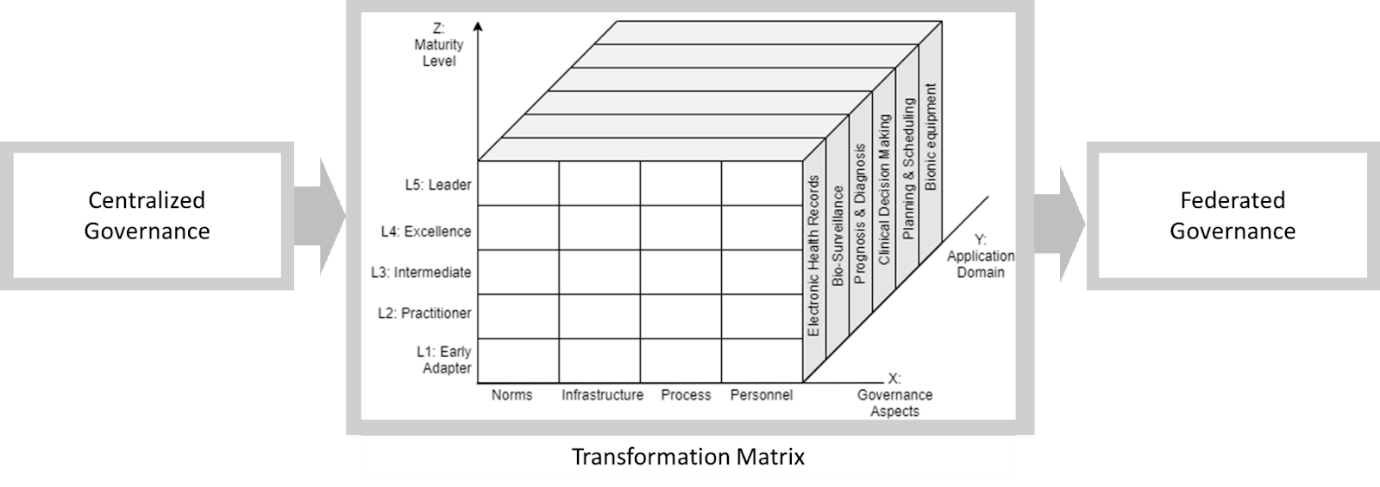

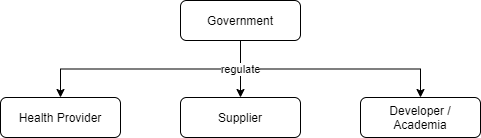

Figure 1. The transition framework from centralized to federated governance via Measurement Matrix

To address these challenges, a comprehensive framework is needed that accounts for each level of the problem. The main purpose of a governance transition from centralized to federated is to encourage new innovation and strengthen AI ethics. By opening opportunities to stakeholders such as the healthcare industry, academia, and communities, we can expect richer data collection and broader consensus on AI ethics. Data could become easily accessible and ubiquitous if stakeholders are allowed to share it on curated platforms. AI model construction then becomes less cumbersome and can focus on building models from unbiased data. We therefore propose a transformation indicator by which each aspect of AI healthcare is managed and monitored.

Technical System

On the technical side, different communities, such as doctors and academics, play important and specific roles. Intensive communication and coordination among these communities are required. We distinguish two paradigms that shape coordination among stakeholders: centralized and federated.

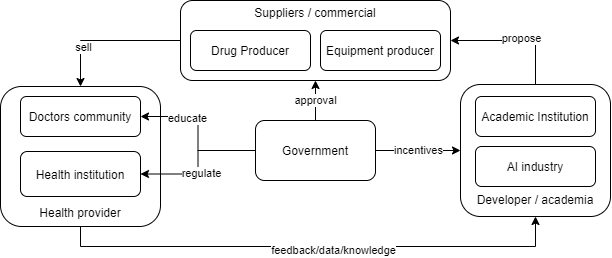

Figure 2. Schema of the centralized paradigm.

The centralized paradigm, shown in Figure 2, is a top-down coordination paradigm that relies heavily on direct government supervision; the federated paradigm is the opposite. Within the centralized paradigm, a well-functioning framework in which each stakeholder properly plays its role must be formed to promote the controlled acceleration of AI development.

Figure 3. The detailed governance framework of the centralized paradigm for AI adoption in healthcare.

Health providers are the end users of AI inventions, as well as the providers of the knowledge and data that academics and researchers need to develop them. Health providers should therefore understand the weaknesses of AI. The government, at the center, plays crucial roles in education, development incentives, and the approval of AI products.

However, the centralized model, in which initiatives are triggered by government action, faces significant challenges. As Figure 3 shows, the government provides incentives to academia, regulates the healthcare community, raises public awareness, and approves industry-standard healthcare practices and medicines. The model therefore depends heavily on how responsive the government is to public demand for operational efficiency. In developing countries, where the centralized model dominates, stakeholders find it difficult to keep pace with technological advances and to scale data collection; as a result, building AI models on an ethical basis is hard to achieve.

Given these disadvantages of the centralized paradigm, we propose that the ideal environment for AI healthcare development is a federated one, in which coordination can be adaptive and flexible. In a federated system, a self-regulatory integrated community, consisting of representatives of each stakeholder community, must be formed to act as a community-driven regulator of the ethics of AI implementation in healthcare. This community may be formed bottom-up by technical actors from each community or induced top-down by the government. This scheme is illustrated in Figure 4.

Figure 4. A self-regulatory integrated community formed bottom-up by the surrounding actors in the federated paradigm.

In contrast with the centralized paradigm, the governance framework for a federated system has every stakeholder play its role without direct government control. Instead, the integrated community stands between stakeholders as a mediator or moderator. The collective roles of government in the centralized paradigm, which include approving, regulating, educating, and promoting AI, are distributed among stakeholders so that responsibilities are balanced. In this paradigm, stakeholders are encouraged to weigh the benefits and risks of AI technology flexibly, since balancing them is one of the key issues for AI in healthcare (Rigby, 2019). This framework is shown in Figure 5.

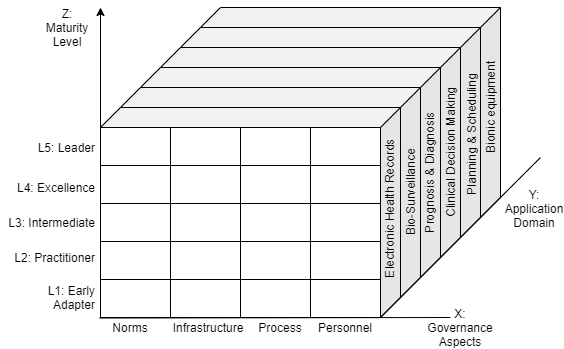

Figure 6. Measurement Matrix to assess AI development governance in healthcare, extended from the Casalone et al. (2021) framework

Once the AI governance framework is settled, carefully planned roadmaps should be formulated to determine the strategic steps toward maturity in AI healthcare. To that end, we propose a transformation matrix as a strategic indicator for the transition to mature federated AI governance (Figure 6). AI healthcare development should take several governance aspects into account so that potentially significant problems can be avoided. There are at least four aspects to consider: (1) norms, comprising ethical principles such as transparency, explainability, justice/equality, safety, resilience, accountability, and human autonomy; (2) infrastructure, comprising the industrial, economic, and political support needed to form a robust development environment; (3) process, comprising technical components such as data management, business processes, and model development; and (4) personnel, comprising the quality of human resources in all involved sectors, such as medical assistants, technicians, doctors, and AI developers themselves. The norms component is the most crucial, as discussed in many studies and reports (Reddy, 2019; Gerke et al., 2020; Google, 2019; Muller et al., 2021; WHO, 2021; Gill et al., 2021; Balagurunathan et al., 2021; KPMG, 2021). Choudhury (2019) proposed further aspects that can be included in the norms component, such as meaningful outcomes, interoperability, and generalizability. These aspects can be added or removed according to the conditions, standards, and state of each country, in the spirit of balancing the benefits and risks of AI technology (Rigby, 2019).

Each of these aspects may pose different problems and dynamics in different application contexts. We therefore recommend dividing AI applications in healthcare into specific domains, which allows development progress to be mapped in detail. These domains, adopted and modified from Hadley et al. (2020), are: (1) electronic health records; (2) bio-surveillance; (3) prognosis and diagnosis; (4) clinical decision making; (5) planning and scheduling; and (6) bionic equipment. Each domain may use one or more AI technologies, such as machine learning, intelligent robots, image recognition, and/or expert systems (Liu et al., 2020). Because each governance aspect may develop differently in each domain, the application domain forms another dimension to consider in roadmap formulation.

Combining these two dimensions (governance aspect and application domain), we can formulate leveling metrics as control mechanisms for managing development performance. Following Casalone et al. (2021), we take five maturity levels as key checkpoints in the roadmap: early adopter, practitioner, intermediate, excellence, and leader. These levels may correspond to the implementation layers of Gasser et al. (2017), who identify three layers to be addressed in AI implementation: the technical layer (e.g., algorithms and data), the ethical layer, and the social-legal layer. In this context, the first two maturity levels (early adopter and practitioner) focus on the technical layer, level 3 (intermediate) focuses on the ethical layer, and the highest two levels (excellence and leader) focus on the social-legal layer. Together, these yield a three-dimensional matrix that serves as a roadmap guideline, with governance aspect and application domain as the base dimensions and maturity level on the vertical axis as the success metric.
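To make the three-dimensional matrix more concrete, the Python sketch below records one maturity level per (governance aspect, application domain) cell and maps each level to the implementation layer it focuses on. It is a minimal illustration under stated assumptions, not a prescribed tool: the names `MeasurementMatrix`, `MaturityLevel`, and `focus_layer` are hypothetical, and the rule that a domain is only as mature as its weakest governance aspect is an illustrative assumption rather than part of the framework.

```python
from enum import IntEnum

# Governance aspects (base dimension 1) and application domains (base dimension 2).
ASPECTS = ("norms", "infrastructure", "process", "personnel")
DOMAINS = (
    "electronic health records",
    "bio-surveillance",
    "prognosis and diagnosis",
    "clinical decision making",
    "planning and scheduling",
    "bionic equipment",
)


class MaturityLevel(IntEnum):
    """Five maturity checkpoints (vertical axis); IntEnum makes them comparable."""
    EARLY_ADOPTER = 1
    PRACTITIONER = 2
    INTERMEDIATE = 3
    EXCELLENCE = 4
    LEADER = 5


def focus_layer(level: MaturityLevel) -> str:
    """Map a maturity level to the implementation layer it focuses on."""
    if level <= MaturityLevel.PRACTITIONER:
        return "technical"
    if level == MaturityLevel.INTERMEDIATE:
        return "ethical"
    return "social-legal"


class MeasurementMatrix:
    """Hypothetical roadmap matrix: one maturity level per (aspect, domain) cell."""

    def __init__(self) -> None:
        # Every cell starts at the lowest checkpoint.
        self.cells = {
            (aspect, domain): MaturityLevel.EARLY_ADOPTER
            for aspect in ASPECTS
            for domain in DOMAINS
        }

    def assess(self, aspect: str, domain: str, level: MaturityLevel) -> None:
        """Record an assessed maturity level for one cell."""
        if (aspect, domain) not in self.cells:
            raise KeyError(f"unknown cell: {aspect!r} x {domain!r}")
        self.cells[(aspect, domain)] = level

    def lagging(self, target: MaturityLevel) -> list:
        """Cells still below the target checkpoint, i.e. where effort is needed next."""
        return [cell for cell, level in self.cells.items() if level < target]

    def domain_level(self, domain: str) -> MaturityLevel:
        """Assumption: a domain is only as mature as its weakest governance aspect."""
        return min(self.cells[(aspect, domain)] for aspect in ASPECTS)


# Usage: electronic health records reach "intermediate" in every aspect except norms.
m = MeasurementMatrix()
for aspect in ASPECTS:
    m.assess(aspect, "electronic health records", MaturityLevel.INTERMEDIATE)
m.assess("norms", "electronic health records", MaturityLevel.PRACTITIONER)

ehr = m.domain_level("electronic health records")
print(ehr.name, focus_layer(ehr))  # PRACTITIONER technical
print(len(m.lagging(MaturityLevel.INTERMEDIATE)), "cells below intermediate")
```

Treating maturity levels as ordered integers lets a roadmap question such as "which cells are still below the intermediate checkpoint?" be answered with a single comparison. Because the brief allows countries to add or remove governance aspects, such an adjustment would only require editing the `ASPECTS` tuple in this sketch.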

Conclusion

Advances in AI technology enable AI-based solutions to issues in the healthcare industry such as cost, accuracy, and the lack of holistic, individualized healthcare systems. However, adopting AI introduces new challenges in ensuring that AI solutions are created ethically. To this end, this policy brief classifies existing healthcare governance systems as centralized or federated and proposes the federated system as the ideal. To guide countries in formulating strategies for developing AI healthcare, an integrated general roadmap should also be constructed.

References

Y. Balagurunathan, R. Mitchell, and I. El Naqa, “Requirements and reliability of AI in the medical context,” Phys. Medica, vol. 83, pp. 72–78, 2021, doi: 10.1016/j.ejmp.2021.02.024.

C. Casalone et al., “Human-Centric AI: From principles to actionable and shared policies,” T20 Policy Brief, 2021.

A. Choudhury, “A framework for safeguarding artificial intelligence systems within healthcare,” Br. J. Heal. Care Manag., vol. 25, no. 8, 2019, doi: 10.12968/bjhc.2019.0066.

S. Gerke, T. Minssen, and G. Cohen, “Ethical and legal challenges of artificial intelligence-driven healthcare,” 2020.

T. D. Hadley, R. W. Pettit, T. Malik, A. A. Khoei, and H. M. Salihu, “Artificial Intelligence in Global Health: A Framework and Strategy for Adoption and Sustainability,” Int. J. Matern. Child Heal. AIDS, vol. 9, no. 1, pp. 121–127, 2020, doi: 10.21106/ijma.296.

R. Liu, Y. Rong, and Z. Peng, “A review of medical artificial intelligence,” Glob. Heal. J., vol. 4, no. 2, pp. 42–45, 2020, doi: 10.1016/j.glohj.2020.04.002.

H. Muller, M. T. Mayrhofer, E. Ben Van Veen, and A. Holzinger, “The Ten Commandments of Ethical Medical AI,” Computer, vol. 54, no. 7, pp. 119–123, 2021, doi: 10.1109/MC.2021.3074263.

S. Reddy, S. Allan, S. Coghlan, and P. Cooper, “A governance model for the application of AI in healthcare,” J. Am. Med. Informatics Assoc., vol. 27, no. 3, pp. 491–497, 2020, doi: 10.1093/jamia/ocz192.

M. J. Rigby, “Ethical dimensions of using artificial intelligence in health care,” AMA J. Ethics, vol. 21, no. 2, pp. 121–124, 2019, doi: 10.1001/amajethics.2019.121.

A. S. Gill and S. Germann, “Conceptual and normative approaches to AI governance for a global digital ecosystem supportive of the UN Sustainable Development Goals (SDGs),” AI Ethics, 2021, doi: 10.1007/s43681-021-00058-z.

WHO, “Ethics and governance of artificial intelligence for health: WHO guidance,” Geneva: World Health Organization, 2021. Licence: CC BY-NC-SA 3.0 IGO.

Google, “Perspectives on Issues in AI Governance,” p. 34, 2019. [Online]. Available: https://ai.google/static/documents/perspectives-on-issues-in-ai-governance.pdf.

KPMG, “The shape of AI governance to come,” p. 15, 2021, [Online]. Available: https://assets.kpmg/content/dam/kpmg/xx/pdf/2021/01/the-shape-of-ai-governance-tocome.pdf